For my Data Rep final, I am comparing the position of Mariah Carey’s hand to the note she is singing and reimagining her melodies as if they were played on an invisible theremin. See here for my initial post.

Work has been coming along well. This past week, I completed my two data collection tasks: tracking her hand and identifying the frames in which she begins singing each note.

Stabilizing the Video Clip

To get the most accurate hand positions I could from the clip, I had to remove every trace of camera movement. This was pretty tough, but I think I did a good job. To remove the camera’s X- and Y-axis motion, I used After Effects to track the tiny tuft of hair in the middle of her head (failed tracking points included her right eye, the point where her dress meets her left thigh, and her right nostril) and shifted each frame to hold the tuft’s position constant. The hair tuft turned out to be a good choice, because removing the Z-axis motion then only required scaling the video, using the tuft as the anchor point and her chin as a reference point. There are few enough zooms and dollies that I could do this step by hand, without the After Effects tracker. The stabilized video looks like this:

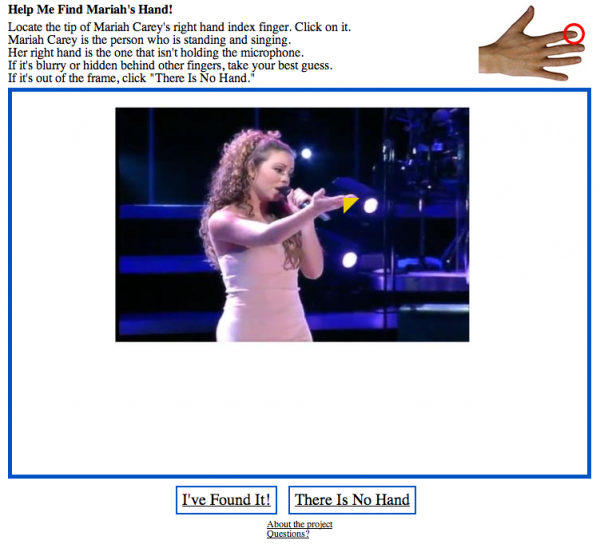
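The post doesn’t spell out the math behind those After Effects adjustments, so here is a minimal Python sketch of how one point in a frame could be mapped into stabilized coordinates. It assumes pixel coordinates and that the tuft and chin positions are known for every frame. It undoes pan by pinning the tuft to a reference position and undoes zoom by restoring the tuft-to-chin distance; it ignores rotation, which the post doesn’t mention. The function name and parameters are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def stabilize_point(p: Point, anchor: Point, chin: Point,
                    ref_anchor: Point, ref_dist: float) -> Point:
    """Map point p from one frame into stabilized coordinates (hypothetical helper).

    anchor:     tracked hair-tuft position in this frame
    chin:       chin position in this frame, used only to estimate zoom
    ref_anchor: where the tuft should stay pinned (e.g. its first-frame position)
    ref_dist:   tuft-to-chin distance in the reference frame
    """
    # Scale factor that undoes zooms/dollies by restoring the tuft-to-chin distance.
    s = ref_dist / math.dist(anchor, chin)
    # Scale about the tuft, then translate the tuft back onto its reference spot.
    return (ref_anchor[0] + s * (p[0] - anchor[0]),
            ref_anchor[1] + s * (p[1] - anchor[1]))


# Reference frame: tuft at (100, 50), chin at (100, 150).
ref_dist = math.dist((100, 50), (100, 150))
# A later frame where the camera has zoomed in 2x and panned.
hand = stabilize_point((160, 140), anchor=(120, 40), chin=(120, 240),
                       ref_anchor=(100, 50), ref_dist=ref_dist)
```

In that example the zoom halves every offset from the tuft, so the fingertip lands at (120.0, 100.0) in the stabilized frame.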

Tracking the Hand

Next, I needed to track her hand. Doing this with the After Effects tracker proved too time-consuming and error-prone, so, in my Ultimate Hat Tip to White Glove Tracking, I crowdsourced this step (remember crowdsourcing?). Using CakePHP, I built a website that loaded each frame into the browser and asked the viewer to click the tip of Mariah’s right index finger. Within a few hours, my database was full of tracking points.
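If several visitors clicked the same frame, their clicks have to be reduced to one point per frame. The post doesn’t say how (or whether) that was done, so this is a hypothetical Python sketch. It assumes the database rows can be exported as (frame, x, y) tuples and takes the per-axis median, so a single stray click can’t drag the estimate far.

```python
from collections import defaultdict
from statistics import median
from typing import Dict, Iterable, List, Tuple


def aggregate_clicks(clicks: Iterable[Tuple[int, float, float]]) -> Dict[int, Tuple[float, float]]:
    """Collapse (frame, x, y) click rows into one point per frame (hypothetical helper)."""
    by_frame: Dict[int, List[Tuple[float, float]]] = defaultdict(list)
    for frame, x, y in clicks:
        by_frame[frame].append((x, y))
    # Per-axis median: robust to an occasional careless or joke click.
    return {
        frame: (median(x for x, _ in pts), median(y for _, y in pts))
        for frame, pts in by_frame.items()
    }


clicks = [(1, 10, 20), (1, 12, 22), (1, 300, 5), (2, 50, 60)]
points = aggregate_clicks(clicks)
```

Here the outlier click at (300, 5) on frame 1 is ignored, leaving (12, 20) for frame 1 and (50, 60) for frame 2.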

Finding the Notes

To know which tracking points to sample, I needed to find the frames on which Mariah starts (and sometimes ends) notes. There are probably some neat ways to do this with audio analysis tools or by mathematically evaluating the tracked points, but I did it the old-fashioned way: scrubbing through the video and listening for myself.

The Data

If you’ve read this far, you deserve a prize. Here you go: all the data I’ve collected. It will probably change before the end of the project, primarily because I need to interpolate her hand position for the frames where her hand isn’t visible. There’s an important block at the end of the clip where her hand dips below the frame, so I need to figure out where it should be. I would also like to go back through her lyrics and pick up more trills (is that the right term? Maybe I should just call them “Mariah Warbles”) from the audio.
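Linear interpolation is one simple way to fill in frames where her hand isn’t visible. This Python sketch is an illustration, not the method the final data will necessarily use; it assumes the tracked points are stored as a dict from frame number to (x, y), and the function name is hypothetical. It only fills gaps that have a tracked frame on both sides: the block at the end of the clip, where her hand leaves the frame, has no later point to interpolate toward and would need extrapolation or a judgment call.

```python
from typing import Dict, Optional, Tuple

Point = Tuple[float, float]


def fill_gaps(track: Dict[int, Point], first: int, last: int) -> Dict[int, Optional[Point]]:
    """Linearly interpolate hand positions for frames missing from `track`.

    Frames before the first known point or after the last one stay None,
    because there is nothing on one side to interpolate from.
    """
    known = sorted(f for f in track if first <= f <= last)
    filled: Dict[int, Optional[Point]] = {f: track.get(f) for f in range(first, last + 1)}
    # Walk each pair of neighbouring tracked frames and fill the frames between them.
    for a, b in zip(known, known[1:]):
        (xa, ya), (xb, yb) = track[a], track[b]
        for f in range(a + 1, b):
            t = (f - a) / (b - a)  # 0 at frame a, 1 at frame b
            filled[f] = (xa + t * (xb - xa), ya + t * (yb - ya))
    return filled


result = fill_gaps({0: (0.0, 0.0), 4: (8.0, 4.0)}, 0, 5)
```

With points tracked only at frames 0 and 4, frame 2 is filled halfway at (4.0, 2.0), while frame 5, past the last tracked point, stays `None`.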

In the “word” column of the data, you’ll notice that some words are duplicated across lines, while others disappear and reappear a few frames down. The duplicated ones mark glissandi, or slides between notes; the others should be treated as regular notes.
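Here is one hypothetical way to turn the “word” column into notes and glissandi in Python. It assumes rows are (frame, word) pairs sorted by frame, that a glissando appears as a run of adjacent rows sharing the same word, and that a word returning after other rows is a new note. The real data may need a different rule, and the function name is made up for illustration.

```python
from itertools import groupby
from typing import Dict, List, Tuple


def group_notes(rows: List[Tuple[int, str]]) -> List[Dict[str, object]]:
    """Group (frame, word) rows, sorted by frame, into notes and glissandi."""
    events: List[Dict[str, object]] = []
    # groupby only merges *adjacent* rows, so a word that comes back later
    # after a different word starts a new group (a new note).
    for word, run in groupby(rows, key=lambda r: r[1]):
        frames = [frame for frame, _ in run]
        events.append({
            "word": word,
            "kind": "glissando" if len(frames) > 1 else "note",
            "frames": frames,
        })
    return events


events = group_notes([(10, "oh"), (25, "la"), (31, "la"), (40, "oh")])
```

In this toy input, “la” on frames 25 and 31 becomes one glissando, while the two separate “oh” rows stay individual notes.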

That’s all for now! There’s so much fun to be had with this data beyond my project’s scope that I really hope other people use it. My next steps will be calculating new pitches, writing new sheet music, and handing it off to a singer to learn.
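As a possible starting point for calculating new pitches, here is a hypothetical Python mapping from stabilized hand height to pitch. Everything in it is an assumption: that a higher hand means a higher note, that pitch changes exponentially with height (so equal hand movements give equal musical intervals), and the default range of 220 to 880 Hz. The second function rounds a frequency to the nearest equal-tempered note name, taking A4 = 440 Hz, which is the form sheet music would need.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def hand_to_frequency(y: float, y_top: float, y_bottom: float,
                      f_low: float = 220.0, f_high: float = 880.0) -> float:
    """Map a stabilized vertical hand position (pixels) to a pitch in Hz.

    Hypothetical mapping: y_top plays f_high, y_bottom plays f_low, and pitch
    varies exponentially in between.
    """
    # Image y grows downward, so flip it: t is 0.0 at the bottom and 1.0 at the top.
    t = (y_bottom - y) / (y_bottom - y_top)
    return f_low * (f_high / f_low) ** t


def nearest_note(freq: float) -> str:
    """Round a frequency to the nearest equal-tempered note name (A4 = 440 Hz)."""
    midi = round(69 + 12 * math.log2(freq / 440.0))
    return f"{NOTE_NAMES[midi % 12]}{midi // 12 - 1}"


f = hand_to_frequency(300, y_top=100, y_bottom=500)
```

A hand halfway between the top and bottom of the range lands two octaves’ geometric midpoint, 440 Hz, which `nearest_note` names “A4”.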